- Use find utility to search contents of files with grep, and archive the resulting files

- Read a list of files in Vim

- Edit PDF metadata programmatically

- Reset an unresponsive baseboard management controller remotely

- Block scam emails claiming to be you

- Determine view count from particular IP address in server logs

- Read the New York Times without subscribing

- Create contact sheet with ImageMagick including EXIF information

- Split gigantic text file into pages based on user-specified record separator

- Test email deliverability with OpenSSL instead of Telnet

- Create daily backups of your email server and delete backups older than 7 days, then upload today's backup to your cloud

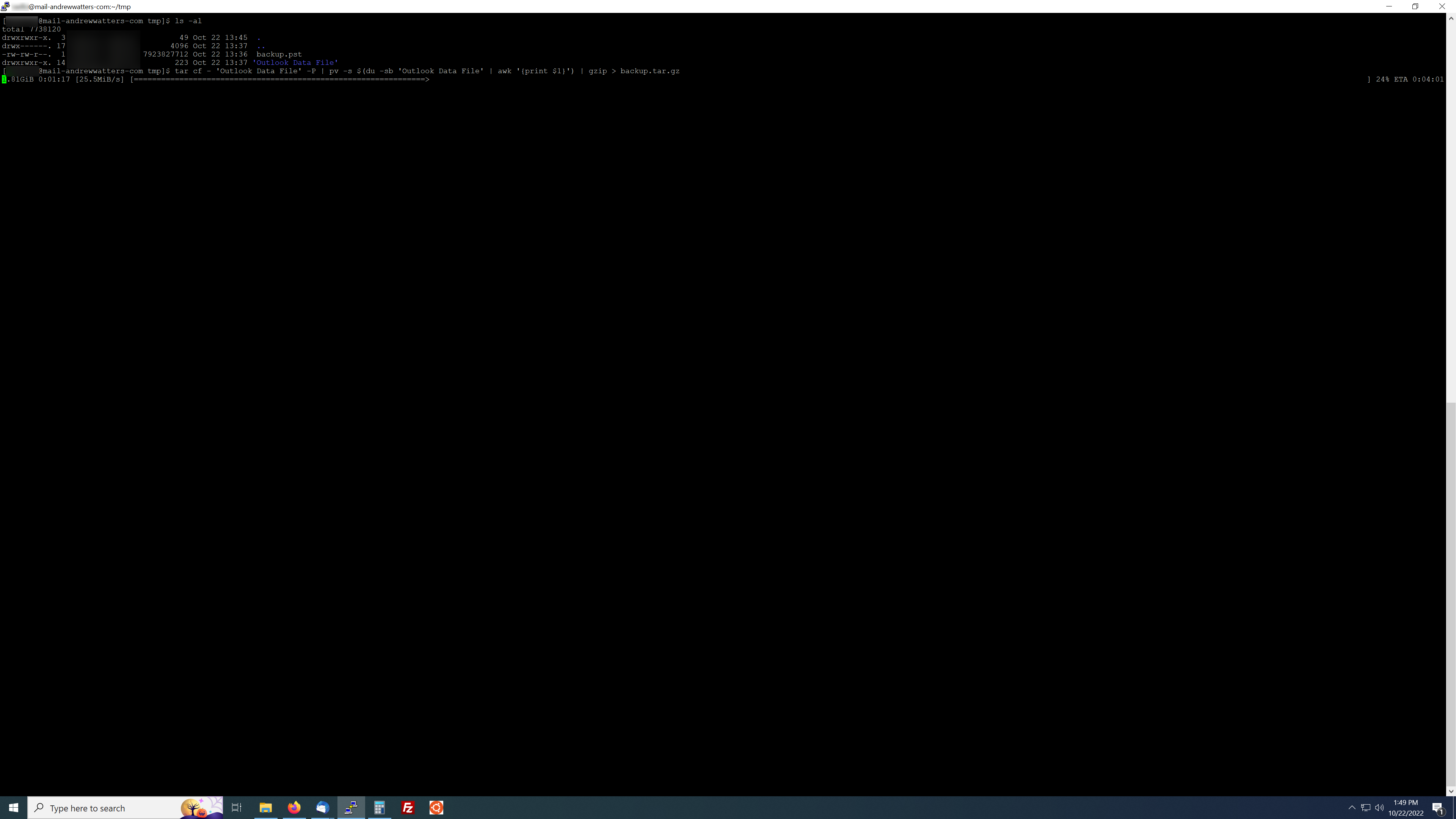

- Use Pipe Viewer to see progress on .tar.gz archives

- Extract frames from high resolution video as PNG stills with timestamps

- Convert HTML tags to HTML entities in code blocks

- Create auto-updating table of contents in any HTML/PHP file 🔥

- Prefer IPv4 when sending email with Postfix

- Restrict SSH logins to specific users only

- Configure Fail2Ban to reject all users who fail to log in when trying to send email

- Configure Postfix to reject all private sector .ru domains

- Search Maildir for particular string in emails

- Create sitemap for 404 page using command line tools 🔥

- Mass edit files in place with changes to script embedded in file

- Embed DDOS-proof web hit counter in web page

- Determine web access history for each IP address, sorted by IP, with timestamps

- Run command on each line of a text file, increment a counter, and show line number with context

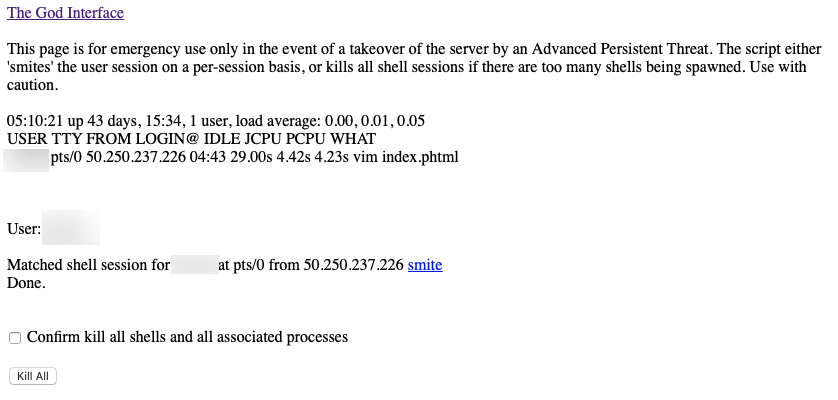

- The God Interface

- Instant email notification of successful SSH logins

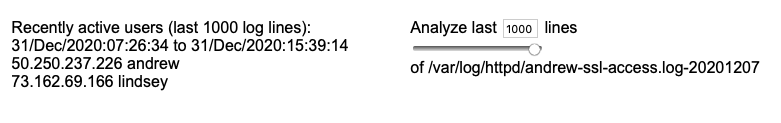

- List recently active users in a secure web portal

- Inline output of website visitor statistics in PHP

- Extract IP address from SSH/SFTP log and report matches in web server logs

- Find each visit by a unique IP and show the dates they visited your website

- Monitor a long process and notify when it completes

- Receive alerts if a particular person shows up as a party in Federal court

- Mass rename a list of EML files to put the dates first in the name instead of last

- Look up DNS record for each IP address in webserver log file

- Solving "body hash did not verify" error with DKIM when trying to send attachments from a PHP script

- Install libmilter on Linux

- Create subtitled video with album art and high resolution audio

- Merge, split, and/or Bates stamp PDF files on Linux

- Change a RHEL 7 system from graphical login to shell login, and then start KDE

Random Linux and macOS tips and tricks

by raellic (and various individuals contributing to Stack Overflow). This page started off by accident when it occurred to me to write down my own tips for my personal reference, and it just snowballed from there. Entries are in reverse chronological order (newest first) as I come across new techniques. As of this moment, there are 40 tips completed.

Use find utility to search contents of files with grep, and archive the resulting files

Handy for finding emails that contain phrases you're looking for.

    find . -exec grep -l "Taiebat" {} \; | zip ~/temp/Nick-Taiebat-email.zip -@

This will put all the matching files into a new zip file.

Read a list of files in Vim

This is useful if you need to take part of the output of the find command on Linux and only want to read the last few entries (or any section of the list). This way you don't have hundreds of files to open.

    vim $(sudo find /mnt/raid5/mail/susanna/cur | tail)

This gives me the last 10 or so emails in the folder and lets me read each one sequentially in Vim. Handy!

Edit PDF metadata programmatically

I had no idea this was even possible until I stumbled upon ExifTool, which reads metadata with Perl and updates it from the command line. I use this for my DissoWare suite of tools to add metadata to PDFs generated by gnuplot. Sample that adds author, title, and a URL to a file:

    exec("exiftool -overwrite_original -Title=\"Cash Flow Analysis\" -Author=\"DissoWare\" -Subject=\"https://www.watters.law/tools/DissoWare/graphs/\"" . $filename . " ./graphs/" . $output);

This PHP line runs ExifTool and does what I need. What a great tool, thanks Phil!

Reset an unresponsive baseboard management controller remotely

This problem rarely happens, but sometimes the web interface for your Asus iKVM or Supermicro IPMI will show "session expired" and log you out immediately. I checked, and apparently this is due to the BMC running out of space when the System Event Log reaches the capacity of the BMC's limited storage. The problem is what to do when this happens and you are not on-site. Here's your solution: issue IPMI commands to the BMC from within your network to reset the BMC, and then boot the machine like you were trying to do. This achieves several goals, starting with not having to go on site and unplug the power cables (lol).

First, log in to your network where you can see the BMC IP address on your LAN. You should already have configured an IP for the BMC, but if not, use Nmap to determine which IP is the BMC. You should get Nmap output showing these ports from an Asus BMC:

    PORT      STATE    SERVICE
    22/tcp    open     ssh
    80/tcp    filtered http
    427/tcp   open     svrloc
    443/tcp   open     https
    49154/tcp open     unknown

Then, here you go:

    ipmitool -U admin -P [IPMI password] -H 192.168.1.6 bmc info
    ipmitool -U admin -P [IPMI password] -H 192.168.1.6 bmc reset cold

The first command should give you some output showing that the BMC is active, like this:

    Device ID                 : 32
    Device Revision           : 1
    Firmware Revision         : 1.32
    IPMI Version              : 2.0
    Manufacturer ID           : 2623
    Manufacturer Name         : Unknown (0xA3F)
    Product ID                : 3971 (0x0f83)
    Product Name              : Unknown (0xF83)
    Device Available          : yes
    Provides Device SDRs      : yes

The second command will actually reset the BMC. This will take a few minutes, and then you can issue a power on command and log in to your machine normally:

    ipmitool -U admin -P [IPMI password] -H 192.168.1.6 power on

You could also skip resetting the BMC if you like, but that would not restore the web interface. Optionally, you can set up an SSH tunnel on your local machine to connect to the remote machine, which is helpful because you can use SFTP to directly connect to the remote machine:

    ssh -L 8484:192.168.1.7:22 [username]@www.andrewwatters.com

This forwards port 8484 on the local machine to port 22 on the remote machine, with a tunnel through my web server, so I can point SSH or SFTP at port 8484 locally. I had to do this recently when I forgot to upload a few files to my law firm portal and I could not make it to the office just to do this. Very handy!

Block scam emails claiming to be you

Add to /etc/postfix/header_checks:

    /^From:.{0,2}((Andrew Watters)|(Andrew G\. Watters)|("Andrew Watters")|("Andrew G\. Watters"))(?!((.{0,3}andrew@andrewwatters\.com.{0,3})|(.{0,3}andrew@raellic\.com.{0,3}))).{0,256}$/ REDIRECT raellic@mail.andrewwatters.com

This is a "negative look-ahead" that matches my name when NOT followed by my actual email addresses. It redirects scam emails claiming to be me to my own account, so that the messages don't even go to my employees. This has been an issue recently and I finally resolved it, I think. You also should change /etc/postfix/main.cf to only apply this header check to the main message, not any attachments. That way you can forward the scam messages to warn people or to abuse@google.com:

    header_checks = pcre:/etc/postfix/header_checks
    nested_header_checks =
    mime_header_checks =

This turns off header checks for attached messages and attachments, so that you don't trigger the action when forwarding a scam message.

Determine view count from particular IP address in server logs

    sudo find /var/log/httpd -name "andrew*" -exec grep -P "GET \/ " {} \; | grep -v "bot" | grep "[0-3][0-9]\/Feb" | sort -t ' ' -k 4 | awk -F ' ' 'NR>0{arr[$1]++}END{for (a in arr) print a, arr[a]}' | sort -t ' ' -k 1

Super handy for figuring out which anonymous visitors visit the index page of your website the most. This one finds all unique visitors for February and shows view counts. Change as needed.
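If you would rather see the busiest visitors first instead of a list ordered by IP, the same counting idea can be wrapped in a small function. This is only a sketch: the function name `count_index_hits` is made up for illustration, and it assumes the usual Apache combined log format, where the client address is the first field on each line.

```shell
# Hypothetical helper: count requests for "/" per client IP, busiest first.
# Assumes combined log format (client address is the first field).
count_index_hits() {
  grep -F '"GET / ' \
    | grep -vi 'bot' \
    | awk '{ hits[$1]++ } END { for (ip in hits) print hits[ip], ip }' \
    | sort -k1,1nr -k2,2
}

# Usage (the log path is an assumption; adjust to your server):
#   sudo cat /var/log/httpd/andrew*access* | count_index_hits | head
```

Putting the count in the first column lets `sort` do a plain numeric sort, with the IP as a tie-breaker.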

Read the New York Times without subscribing

    curl https://www.nytimes.com/2023/08/06/world/europe/putins-forever-war.html | lynx -stdin

Until recently, it was possible to read the New York Times directly with Lynx, the text-only browser. They seem to have finally caught on 20 years later, and they now require JavaScript to be enabled and ad blockers to be disabled in order to view any article. Enter cURL. With cURL, you simply pipe its output to Lynx and achieve the same result. Enjoy!

Create contact sheet with ImageMagick including EXIF information

    montage -tile 3x11 -verbose -geometry 30% -border 10 -label '%f %wx%h %C %Q\%\n%[EXIF:Model]' *.JPG sheet.jpg

This takes a folder full of images, resizes them to 30% of original size, labels them with filename, dimensions, compression type, quality level, and camera model, and then creates a tiled contact sheet. Voila:

Split gigantic text file into pages based on user-specified record separator

    cat ../MIOG-pages.txt | awk -v RS="<page>" 'NR > 1 { print RS $0 > "MIOG-" (NR-1) ".php"; close("MIOG-" (NR-1) ".php") }'

This splits a file based on chunks of text that start with "<page>" and creates as many individual files as there are pages. Very handy!
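The one-liner above relies on a multi-character record separator, which not every awk implementation accepts. As a hedged alternative, here is a line-oriented sketch that starts a new output file whenever a line begins with the marker. The function name `split_on_marker` and its argument order are invented for this example, and it assumes each marker sits at the start of a line.

```shell
# Hypothetical helper: split a file into numbered pages.
#   $1 = input file, $2 = literal marker, $3 = output prefix, $4 = output suffix
# Assumes every marker appears at the start of a line; text before the
# first marker is skipped, like the NR > 1 test in the one-liner.
split_on_marker() {
  awk -v marker="$2" -v prefix="$3" -v suffix="$4" '
    index($0, marker) == 1 {
      if (out) close(out)        # avoid running out of file handles
      n++
      out = prefix n suffix
    }
    out { print > out }
  ' "$1"
}

# Usage:
#   split_on_marker ../MIOG-pages.txt "<page>" MIOG- .php
```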

Test email deliverability with OpenSSL instead of Telnet

When sending email to people who don't disclose their email addresses online, I often will guess their address and then test it with Telnet to port 25 of their email server. This has several downsides, such as using plaintext that anyone can see and that leaves a permanent record of what data were transmitted. So now I use OpenSSL to do this, which has the added benefit of giving security and configuration information about the subject email server. Check out this session, in which I send email claiming to be from a virtual private server to my own email server:

    awatters@Andrews-MBP-3 ~ % openssl s_client -starttls smtp -connect mail.andrewwatters.com:25
    CONNECTED(00000005)
    depth=3 C = PL, O = Unizeto Technologies S.A., OU = Certum Certification Authority, CN = Certum Trusted Network CA
    verify return:1
    depth=2 C = US, ST = Texas, L = Houston, O = SSL Corporation, CN = SSL.com Root Certification Authority RSA
    verify return:1
    depth=1 C = US, ST = Texas, L = Houston, O = SSL Corporation, CN = SSL.com RSA SSL subCA
    verify return:1
    depth=0 CN = andrewwatters.com
    verify return:1
    ---
    Certificate chain
     0 s:/CN=andrewwatters.com
       i:/C=US/ST=Texas/L=Houston/O=SSL Corporation/CN=SSL.com RSA SSL subCA
     1 s:/C=US/ST=Texas/L=Houston/O=SSL Corporation/CN=SSL.com RSA SSL subCA
       i:/C=US/ST=Texas/L=Houston/O=SSL Corporation/CN=SSL.com Root Certification Authority RSA
     2 s:/C=US/ST=Texas/L=Houston/O=SSL Corporation/CN=SSL.com Root Certification Authority RSA
       i:/C=PL/O=Unizeto Technologies S.A./OU=Certum Certification Authority/CN=Certum Trusted Network CA
    ---
    Server certificate
    -----BEGIN CERTIFICATE-----
    MIIIrTCCBpWgAwIBAgIQb39rYSJwY8v6fGWwn1ukwDANBgkqhkiG9w0BAQsFADBp
    MQswCQYDVQQGEwJVUzEOMAwGA1UECAwFVGV4YXMxEDAOBgNVBAcMB0hvdXN0b24x
    GDAWBgNVBAoMD1NTTCBDb3Jwb3JhdGlvbjEeMBwGA1UEAwwVU1NMLmNvbSBSU0Eg
    U1NMIHN1YkNBMB4XDTIzMDEwNTE1MjI1M1oXDTI0MDEwMzE1MjI1M1owHDEaMBgG
    A1UEAwwRYW5kcmV3d2F0dGVycy5jb20wggIiMA0GCSqGSIb3DQEBAQUAA4ICDwAw
    ggIKAoICAQC4guqvUsdlj2fOPLNEC9BIOaplOmZiZrlqay+8sPX/92G6SN0Vsdkd
    7ekmJ/v3JBBC2ugEn2UTgMNz+Ec31rA0/Q1u+da3+apZpjJ2mDu2a0Ap9FllVhF9
    YDj3wY/xJU3kz3jzd2SWKrlo0EQnU0bClZ/1Rp4QU+WYUfBtOTHCj5sMhovTAvVl
    XM6nEHLK98omvwch/A0vza5m8Uq4xbjNE4wYe6AbNlLX+PfLO6y1cHbKOroZGrdN
    k0kjjw433fZ/lS3uOFbLzC1P5dlyz+oq11wXQmf38hFsXO3mlPL9MzBY4w0jTi7C
    iT/RhlU+YrTrWIDq8lTnFvvoJ5qe1/mGRZNJD+pkKaGRsNAVgEzrbUKRz2scQmnc
    e/1saj6HQKzAi1Pzmv2W58EgXbnZv2DyWuK6E7d2EmiBm1kUh/hlCSPfkcSPmTNt
    lgO5U0rGYS1Deu62S1ygtBCT1JuEixOPQJz+YOQfGXASrFzMJjqEmiE9qCBaSX7i
    DDd38og/iWhArkCC8CvGgvfF8blrXm9oUsPWuVwT/Cu8qEymrlSTC3lGWqLQKze+
    jBZl3c8BiMinUqb6vX4Ff82R6EGQ6KYXsovpuSoler17K794xlpMIrV1Ey1uuHag
    XXn15duFr0U98D6vb43GX2gMysxT8yn46mldtyHkFL1NLixpT2AVjwIDAQABo4ID
    nDCCA5gwDAYDVR0TAQH/BAIwADAfBgNVHSMEGDAWgBQmFH7g3Nem9+LUBCffYfHC
    7OcyyjByBggrBgEFBQcBAQRmMGQwQAYIKwYBBQUHMAKGNGh0dHA6Ly9jZXJ0LnNz
    bC5jb20vU1NMY29tLVN1YkNBLVNTTC1SU0EtNDA5Ni1SMS5jZXIwIAYIKwYBBQUH
    MAGGFGh0dHA6Ly9vY3Nwcy5zc2wuY29tMIGGBgNVHREEfzB9ghFhbmRyZXd3YXR0
    ZXJzLmNvbYILKi5zbGFzaC5sYXeCEyouYW5kcmV3d2F0dGVycy5jb22CESoudGlu
    YXdhdHRlcnMuY29tgg0qLnJhZWxsaWMuY29tghIqLmRldmFocXVhcnRldC5jb22C
    ECouamVud2F0dGVycy5jb20wUQYDVR0gBEowSDAIBgZngQwBAgEwPAYMKwYBBAGC
    qTABAwEBMCwwKgYIKwYBBQUHAgEWHmh0dHBzOi8vd3d3LnNzbC5jb20vcmVwb3Np
    dG9yeTAdBgNVHSUEFjAUBggrBgEFBQcDAgYIKwYBBQUHAwEwRQYDVR0fBD4wPDA6
    oDigNoY0aHR0cDovL2NybHMuc3NsLmNvbS9TU0xjb20tU3ViQ0EtU1NMLVJTQS00
    MDk2LVIxLmNybDAdBgNVHQ4EFgQUYh9RmVfj1NzctA7U2odPSq6vtYwwDgYDVR0P
    AQH/BAQDAgWgMIIBgAYKKwYBBAHWeQIEAgSCAXAEggFsAWoAdwA7U3d1Pi25gE6L
    MFsG/kA7Z9hPw/THvQANLXJv4frUFwAAAYWCkFi9AAAEAwBIMEYCIQD61Zsa2b2k
    SdWEn2JUyI18z6uH8knY+LkQhdqWX2Pk8wIhANgt1lcO+hUzn3EEnSEwfnG/P9Wt
    h757AUijSDb9C1h0AHYA7s3QZNXbGs7FXLedtM0TojKHRny87N7DUUhZRnEftZsA
    AAGFgpBYvgAABAMARzBFAiA+DXOlmYCPj47t/0Ou/1TBDt+1AuYH0K0ybJjszYnD
    4QIhAP4SEhmJJ+Kaq6BnoW5RNdLVQylaPhsAC+mtot3lC6K/AHcAb1N2rDHwMRnY
    mQCkURX/dxUcEdkCwQApBo2yCJo32RMAAAGFgpBY6wAABAMASDBGAiEA4oFBS51v
    Z4YexduKDdOU3zPKvjTpdyfdsmOgzovKHK4CIQDtgI70lEuGAifbD9cy20jVZbsu
    E6B9v8k7icBmE70f3TANBgkqhkiG9w0BAQsFAAOCAgEAaMRjorP8Y7NfSRhTqtC8
    yXzVi1u7Z5yFzDesudvn+WFrkzNPrhkLh7voRUlt7WtfpQCQOxXxm9oLhcF2bOl5
    YbhJ6XBsZw7qj6VyW8aNi756dfrzjaGdXk+LWcVW8be8zZdY0yE+CsMl+BubaN2H
    YsQRdmi5SUhq7Q4AhQxoF0LbqgTU2OH8v3gDFdk8Z+akiV5rbCbR+6WiHsGDHpyY
    EE7iftwb8/yc5mXxqckCq+4qA+x0r9mLWv+jbk41zLuPt9JaM3RzxLBi44rTUcKu
    vr/H/CKIV0sb8gYF9dC8UcGKEcBV+v7qhSVr3yZuo7Y5L1Bs8bAr0gv6oQqrh8Ti
    1VeMq9VnPSoeCbz7cFyrUSMmuYaZ5/7ZWpeRQk13VzqtEoalZWY/MLIfxJlfOoOF
    VixpPTJZJm2gb99fbZwhfj8J4sgmeUC2YAbyCzy0m4yuPDzfg8+++kg8FhRV+Dle
    3MlBdaB5PG8J9lRHl4WdKkZwG1jtimvKg9tkjK0NXKexcwer2A+Hjt+tShb5xNce
    MWJBS4pROOJek271qm+wuyk+L00gCZ2rGIn33OuzewGHV0g9XQpSuXSwtUXtzCbK
    dYUI6ngwK3xfn1UvV7EkOBQ71ouHGCZ8UEy7gQ0Opb0NoBr1JzOEeBcXfR+6z10X
    n05QrlHjmLWordt1/2tgRwk=
    -----END CERTIFICATE-----
    subject=/CN=andrewwatters.com
    issuer=/C=US/ST=Texas/L=Houston/O=SSL Corporation/CN=SSL.com RSA SSL subCA
    ---
    No client certificate CA names sent
    Server Temp Key: ECDH, X25519, 253 bits
    ---
    SSL handshake has read 6482 bytes and written 316 bytes
    ---
    New, TLSv1/SSLv3, Cipher is ECDHE-RSA-CHACHA20-POLY1305
    Server public key is 4096 bit
    Secure Renegotiation IS supported
    Compression: NONE
    Expansion: NONE
    No ALPN negotiated
    SSL-Session:
        Protocol  : TLSv1.2
        Cipher    : ECDHE-RSA-CHACHA20-POLY1305
        Session-ID: 8F74B48575BB67C87EC5516294BDBBB55FD7464C6979AF44F07C09F973E47038
        Session-ID-ctx:
        Master-Key: 079ADB39E794D816C55C8ED0D6AAC3C74A1936256E12FB19C533BF0E04279AFBA2EA5904F4E627463EB63835AFF72AEB
        TLS session ticket lifetime hint: 7200 (seconds)
        TLS session ticket:
        0000 - d0 b2 36 43 b2 9c 1c d9-e7 68 ff d6 2e 89 fd 75   ..6C.....h.....u
        0010 - f5 19 ba f5 24 d8 37 57-2a da 46 b4 e6 41 6d fe   ....$.7W*.F..Am.
        0020 - 14 1b 05 da 0e b9 31 8f-d7 44 46 86 82 1e 21 d8   ......1..DF...!.
        0030 - 37 3b 70 d8 0e 0e 1d 8b-1e 5b 5b bb 80 c5 51 49   7;p......[[...QI
        0040 - db bd 54 b9 76 55 7e 71-62 7c 3a af 5f 5a 12 99   ..T.vU~qb|:._Z..
        0050 - 70 ba b4 48 fe 2b f5 5c-ef ae ab 20 32 e3 84 f2   p..H.+.\... 2...
        0060 - b4 6c 63 de 05 ac 95 56-d4 ff 49 90 94 8e 47 27   .lc....V..I...G'
        0070 - 0c 11 2e 39 cf 94 b4 65-d4 ab 07 85 c0 94 94 4d   ...9...e.......M
        0080 - 55 f1 69 e7 a5 ed d0 2e-26 d7 97 50 93 e4 b0 ea   U.i.....&..P....
        0090 - 75 0c 6a 1d db 80 1a 64-22 1b 33 91 07 a4 18 06   u.j....d".3.....

        Start Time: 1678885386
        Timeout   : 7200 (sec)
        Verify return code: 0 (ok)
    ---
    250 SMTPUTF8
    helo ps354511.dreamhostps.com
    250 mail.andrewwatters.com
    mail from: test@ps354511.dreamhostps.com
    250 2.1.0 Ok
    rcpt to: andrew@andrewwatters.com
    250 2.1.5 Ok
    data
    354 End data with <CR><LF>.<CR><LF>
    From: test@ps354511.dreamhostps.com
    To: andrew@andrewwatters.com
    Subject: test

    hello
    .
    250 2.0.0 Ok: queued as 1BCA1C042476
    quit
    221 2.0.0 Bye
    closed

Interestingly, DreamHost does not have any SPF or DMARC records for this VPS, which means I can impersonate it from any IP address (sad). The chances of someone doing so are low, but it could be easily automated, so they should fix that.
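If you only want to know whether a guessed address is accepted, you can stop after the RCPT step so that no message is actually sent. The sketch below prints the same dialogue as the session above, minus the data phase, with a pause between commands so the server can answer each one. The function name `smtp_probe_script`, the example host names, and the one-second default pause are all assumptions for illustration. The commands are kept in lowercase like the session above, because `openssl s_client` treats a line starting with a capital R or Q specially in interactive mode.

```shell
# Hypothetical helper: emit an SMTP dialogue that stops after RCPT.
#   $1 = HELO name, $2 = envelope sender, $3 = recipient under test,
#   $4 = seconds to pause between commands (default 1)
smtp_probe_script() {
  delay="${4:-1}"
  for cmd in "helo $1" "mail from:<$2>" "rcpt to:<$3>" "quit"; do
    printf '%s\r\n' "$cmd"   # SMTP lines end in CRLF
    sleep "$delay"
  done
}

# Usage (host names are placeholders):
#   smtp_probe_script vps.example.org test@vps.example.org someone@example.com \
#     | openssl s_client -quiet -starttls smtp -connect mail.example.com:25
```

A 250 reply to the `rcpt to` line suggests the address is accepted, though some servers accept everything at this stage and bounce later, so treat the result as a hint.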

Create daily backups of your email server and delete backups older than 7 days, then upload today's backup to your cloud

Add to root's crontab:

    0 1 * * * tar -cvf /mnt/raid5/storage/backups/mail.tar-`date +\%Y\%m\%d` /mnt/raid5/mail > /mnt/raid5/storage/backups/log 2>&1 ; echo "Email backup completed." | mail -s "Email backup results" andrew@andrewwatters.com
    0 5 * * * find /mnt/raid5/storage/backups/mail.tar* -mtime +6 -type f -delete
    0 5 * * * sshpass -p "[redacted]" scp /mnt/raid5/storage/backups/mail.tar-`date +\%Y\%m\%d`* [redacted]@[redacted]:~/backups/

Extremely useful for retaining data for compliance purposes! I will extend this in the future to create automatic web server backups that sync to my failover location.

Use Pipe Viewer to see progress on .tar.gz archives

    tar cf - 'Outlook Data File' -P | pv -s $(du -sb 'Outlook Data File' | awk '{print $1}') | gzip > backup.tar.gz

Progress bar for tar, very simple.

Extract frames from high resolution video as PNG stills with timestamps

Adapted from this helpful tip on StackExchange.

    ffmpeg -skip_frame nokey -i ../Massachi-6K.mp4 -vsync 0 -frame_pts true -vf "drawtext=font='Arial':fontcolor=white:fontsize=25:x=(w-tw)/2:y=h-th-10:text='Massachi-6K.mp4 \ ':timecode='00\:00\:00\:00':rate=30" frame-%05d.png

Extracts one still every second in a 30 fps video and writes it to a lossless PNG file, with the filename and a timestamp centered at the bottom, and the frame number as the output filename. Very handy!

Convert HTML tags to HTML entities in code blocks

In vim, it's the following commands:

    :s/</\&lt;/gc
    :s/>/\&gt;/gc

This substitutes tags on the same line and asks for confirmation before changing. Critical because changing HTML tags to HTML entities is a very tedious and error-prone process. If you have more than one line, you can set the range or do the entire file if you like. I personally do opening tags and closing tags separately just in case, but I will add a one-liner in the near future.

Create auto-updating table of contents in any HTML/PHP file 🔥

Hot! This may be my top tip of all time-- huge time saver! Extremely handy when you are just adding to an existing list and can't possibly keep track of all the items. I use this to generate the "live" table of contents at right as well as the <a name="n"> tags in the body. Check this out:

    passthru('let count=0; cat index.php | grep -v "Table of Contents" | grep "<li>" | while read LINE; do let count=count+1; echo $LINE | sed "s/<li><p><strong><a name=\".*\">//" | sed "s/<\/strong><\/p>//" | sed "s/^/<li><a href=\"#$count\">/" | sed "s/$/<\/a><\/li>/"; done; let count=0; cat index.php | grep -v "Table of Contents" | grep "<li>" | while read LINE; do let count=count+1; TIP=`echo $LINE | sed "s/^<li><p><strong>//" | sed "s/<\/strong><\/p>//" | sed "s/<a name=\"[0-9]\+\">//"`; sed -i "s|<a name=\"[0-9]\+\">$TIP|<a name=\"$count\">$TIP|" index.php; done;');

It searches the file for list items outside the Table of Contents (which would be a recursion if I didn't eliminate it), drops the formatting, and adds new list links to each item. You'll need to adapt it to your formatting and style. Here, I am consistent with my formatting of list items, so it works well.

What would be super cool is sorting the items by popularity instead of age. That's going to be a tough one because I can't track "#" clicks without JavaScript to count them. Challenge accepted.

Prefer IPv4 when sending email with Postfix

By default, Postfix prefers IPv6. The problem is that if you have a smaller IPv6 allocation than a /64, such as a /112, Google apparently counts your network neighbors' email volume in the same /64 range against your /112 IPv6 address range. I see where they are coming from with this, in that a single /64 holds about 1.8 × 10^19 addresses (even a /112 holds 65,536), and it would be impossible to keep track of individual IPv6 addresses. But the problem is that when being efficient with IPv6, you get blocked with zero justification. I switched to IPv4 preferred using my "seasoned" IPv4 address that has been in use for the last 18 months, and I seem to have stopped having problems delivering to Google users. Your mileage may vary. Add this to /etc/postfix/main.cf:

    smtp_address_preference = ipv4

Restrict SSH logins to specific users only

Add this line to the end of /etc/ssh/sshd_config:

    AllowUsers username1 username2 username3

This will only allow SSH and SFTP for the specified users instead of anyone. That way, if one of your other users exposes their password, an intruder can't use it to log in to a shell. Instead, the intruder will be restricted to only reading the user's email (lol). Be sure to update the /etc/fail2ban/jail.conf file to set ignoreip for your office, so that accidentally repeated failed logins by employees do not trigger fail2ban.

Configure Fail2Ban to reject all users who fail to log in when trying to send email

This is a good one for when you run your own email server and have legitimate users logging in to send email with SASL authentication. When you allow SASL for relaying email, you'll get limitless spammers trying to log in with random user names and passwords to send email through your server. To prevent that, use fail2ban with a postfix-sasl jail (in the default configuration, you just add "enabled=true" to that section of jail.conf). Note: always use outbound rate limiting when running your own email server so that a compromised user account cannot exceed the rate limit. I personally set the rate limit at three messages per minute, just in case I want to queue all my email windows and hit send en masse. A regular user should never exceed this limit, and I almost never would.

    sudo fail2ban-client -vvv set postfix-sasl banip 77.40.0.46/16

This command bans the entire PPPoE network of Rostelecom (65,536 addresses), which is necessary because dialup users in Russia are apparently the biggest fake login customers.

Configure Postfix to reject all private sector .ru domains

Add this to your /etc/postfix/access file (note: /etc/postfix/main.cf must be set to use PCRE on the access file):

    # Block the entirety of Russia except for government domains 6 18 2022
    /(?<!mil|ac|gov|edu)\.ru$/ REJECT We have made the difficult decision to reject all private sector .ru domains due to the amount of spam. Government users or educational senders may call to confirm access, see https://www.andrewwatters.com/#contact

This one is handy due to the amount of spam I get concerning Russian brides as well as phishing efforts. Uses a "negative look-behind" in Perl (try out this awesome tool), which means that it only matches .ru when not preceded by mil, ac, gov, or edu. Matches "mail.ru" but not "mil.ru" for example, which is the desired behavior. I have this one on the off-chance that someone from a Russian university or government institution emails me, perhaps in response to my /syn page. I've confirmed that this works properly by reviewing my maillog, so feel free to use it.

Search Maildir for particular string in emails

    find . -exec grep -B 5 "To: tinachung.re" {} \;

This one goes through the 70,000+ emails in my sent mail folder and finds all the ones I sent to my wife's work email, with 5 lines of leading context (the -B flag to grep). Very handy and something you can't do on Google, although I would prefer to use Dovecot's search index in the future to be more efficient. I much prefer Maildir to mbox format because getting a lock on a 25 GB mbox file is harder than on a single 2 KB email, but the downside with a lot of messages is that you can't simply grep through the files directly due to the argument list being too long, which is why this goes through find.

Create sitemap for 404 page using command line tools 🔥

I started using /missing.php to be more user-friendly when URLs are broken. But I wanted to include a sitemap for people to get back where they were going. Here you go:

    wget --spider --force-html -r -l2 https://www.andrewwatters.com 2>&1 | grep "http" | cut -d " " -f 4 | grep -v '\(\.png\)\|\(\.jpg\)\|\(\.pdf\)\|\(\.svg\)\|\(\.js\)\|\(.jpeg\)\|\(.txt\)\|\(.css\)\|\(.JPG\)\|\(.mp4\|.MP4\|.MOV\|.mov\)\|\(.xlsx\)\|\(\/site.webmanifest\)' | sed "s/https\:\/\/www.andrewwatters.com/<a href=\"/g" | uniq | grep . | uniq | sort -f -k 1.2 | grep -v "http" | sed "s/\/$/\/\">/g" | awk -F "\"" '{print $1,"\"",$2,"\"",$3,$2,"</a><BR>"}' | sed 's/ //g' | sed 's/ahref/a href/g'

I could have been more efficient here, but it is what it is. This will print something like this, which is exactly what I needed:

/

/congress/

/dashboard/

/DreamGirl/

/email/

/face/

/FBI/

/forms/

/hall-of-shame/6x7/

/hall-of-shame/6x7/motion/

/hall-of-shame/binglong-yang/

/hall-of-shame/mahsa-parviz/

/hall-of-shame/timeshare-rico/

/investigations/

/law/

/law/acosta/

/law/oracle/

/law/V5/

/linux/

/matrices/

/network/

/network/100G/

/primes/

/primes/images/

/privoxy/

/reviews/keyboard/

/reviews/typewriter/

/store/

/SW/

/syn/

/the-forever-war/

/vision/

/wmap/
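The filtering and link-building half of that pipeline can be condensed a little. The following is a sketch under stated assumptions, not a drop-in replacement: the function name `urls_to_links` is invented, it expects one URL per line on standard input (for example, the fourth field of the wget spider output), and the list of asset extensions is only a guess at what you would want to hide.

```shell
# Hypothetical helper: turn a list of URLs into site-relative links.
#   $1 = site origin to strip, e.g. https://www.example.com (placeholder)
# Reads one URL per line on stdin; skips other hosts and static assets.
urls_to_links() {
  grep -F "$1/" \
    | grep -Eiv '\.(png|jpe?g|pdf|svg|js|txt|css|mp4|mov|xlsx|webmanifest)$' \
    | sed "s|^$1||" \
    | grep '^/' \
    | sort -f | uniq \
    | awk '{ printf "<a href=\"%s\">%s</a><BR>\n", $0, $0 }'
}

# Usage:
#   wget --spider --force-html -r -l2 https://www.example.com 2>&1 \
#     | grep "http" | cut -d " " -f 4 | urls_to_links https://www.example.com
```

Using a single case-insensitive extension pattern avoids listing `.JPG` and `.jpg` separately, and letting awk print the whole anchor tag removes the need for the space-stripping passes at the end.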

Mass edit files in place with changes to script embedded in file

I mass renamed my .phtml files to .php during an update. That left about 64 occurrences of a particular function in my sites, as well as hundreds of links to .phtml files. Check this out:

    find ./* -name "*.php" -type f -exec sed -i "s/filemtime(\"index\.phtml\")/filemtime(\"index\.php\")/g" {} \;

This edits all the files in place to correct the file name extension, leaving everything else untouched. You can do the same for links. Otherwise, it would be impossible to fix all the links!

Embed DDOS-proof web hit counter in web page

    grep "GET \/ " /var/log/httpd/andrew*access* | cut -d " " -f 1 | cut -d ":" -f 2 | wc -l | sed ':a;s/\B[0-9]\{3\}\>/,&/;ta' > /var/www/andrew/stats.txt; echo "/index" >> /var/www/andrew/stats.txt; grep "GET \/law\/ " /var/log/httpd/andrew*access* | cut -d " " -f 1 | cut -d ":" -f 2 | wc -l | sed ':a;s/\B[0-9]\{3\}\>/,&/;ta' >> /var/www/andrew/stats.txt; echo "/law" >> /var/www/andrew/stats.txt; grep "GET \/syn\/ " /var/log/httpd/andrew*access* | cut -d " " -f 1 | cut -d ":" -f 2 | wc -l | sed ':a;s/\B[0-9]\{3\}\>/,&/;ta' >> /var/www/andrew/stats.txt; echo "/syn" >> /var/www/andrew/stats.txt;

This is a great one and an improved version of the one below. Add this to your crontab and run the command every minute. That way no one can lock up your server: the command takes about 500 milliseconds with a couple hundred megabytes of log files. Success!
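Since the same count-and-format step repeats for every page, it can be factored into two small functions. This is a minimal sketch, and the names `page_hits` and `add_commas` are made up for illustration. The comma loop is written without the GNU-only `\B` and `\>` so it should also run with the sed that ships on macOS.

```shell
# Hypothetical helper: count requests for one exact path.
#   $1 = request path such as /law/ ; reads access-log lines on stdin
page_hits() {
  grep -cF "\"GET $1 "
}

# Hypothetical helper: add thousands separators, 1234567 -> 1,234,567
add_commas() {
  sed -e ':a' -e 's/^\([0-9][0-9]*\)\([0-9]\{3\}\)/\1,\2/' -e 'ta'
}

# Usage (paths are assumptions); writing to a temporary file and renaming it
# means the web page never reads a half-written stats file:
#   { cat /var/log/httpd/andrew*access* | page_hits / | add_commas; echo "/index"; } \
#     > /var/www/andrew/stats.txt.tmp && mv /var/www/andrew/stats.txt.tmp /var/www/andrew/stats.txt
```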

Determine web access history for each IP address, sorted by IP, with timestamps

New and improved:

    sudo find /var/log/httpd/ -name "*andrew*" -exec grep "\/6x7\/ " {} \; | cut -d "-" -f 1,2,3 | sort -k 1 | sed "s/\[//" | sed "s/- - /- /g"

This one works on SELinux systems where grep may not be allowed to use wildcards in privileged directories, and also works with IPv6. This searches all of my web server logs and produces similar output to the below example, though I've chosen to sort by IP address instead of date:

    69.160.160.60 - 16/Mar/2023:15:04:45
    71.36.217.2 - 17/Mar/2023:14:56:53
    71.36.217.2 - 17/Mar/2023:18:13:21
    73.31.252.175 - 23/Mar/2023:19:32:39
    74.51.155.55 - 27/Mar/2023:12:07:31
    74.51.155.55 - 27/Mar/2023:12:12:18
    75.221.96.253 - 06/Apr/2023:12:42:56
    76.202.243.201 - 02/Apr/2023:16:12:16
    85.188.1.90 - 16/Mar/2023:08:31:53
    85.76.163.193 - 16/Mar/2023:08:15:08
    85.76.163.193 - 16/Mar/2023:08:27:08
    88.99.250.124 - 25/Mar/2023:19:08:15
    88.99.250.124 - 25/Mar/2023:19:31:16
    91.240.118.252 - 18/Mar/2023:08:23:45
    91.240.118.252 - 18/Mar/2023:17:05:46
    94.130.219.240 - 09/Apr/2023:16:21:03
    96.71.249.81 - 21/Mar/2023:11:52:13
    97.113.131.70 - 29/Mar/2023:13:12:08
    99.50.128.161 - 28/Mar/2023:08:54:22
    99.89.37.180 - 15/Mar/2023:10:29:23

Prior version: I need to update this to be compatible with IPv6 now that I also serve web pages over IPv6. But for now, here it is:

    grep "GET \/syn\/ " /var/log/httpd/andrew*access* | cut -d " " -f 1,4 | cut -d ":" -f 2,3,4,5 | sed "s/\[//g" | sort -k 1 > ~/test

Sample output:

    95.216.96.170 10/Nov/2021:12:49:47
    95.216.96.170 15/Aug/2021:00:55:18
    95.216.96.170 25/May/2021:03:22:44
    95.216.96.170 26/Feb/2021:07:31:56
    95.216.96.242 09/Apr/2021:03:20:43
    95.216.96.242 12/Oct/2021:14:26:58
    95.216.96.242 25/Jun/2021:10:56:46
    95.216.96.242 26/Feb/2021:12:42:44
    95.216.96.244 09/May/2021:03:40:59
    95.216.96.244 09/Nov/2021:09:52:37
    95.216.96.244 13/Aug/2021:21:23:57
    95.216.96.244 13/Sep/2021:10:53:24
    95.216.96.244 24/Apr/2021:03:48:56
    95.216.96.244 27/Mar/2021:10:12:56
    95.216.96.244 28/Jan/2021:20:07:30
    95.216.96.245 25/Jun/2021:07:15:02
    95.216.96.254 08/Apr/2021:21:07:26
    95.216.96.254 09/Jun/2021:15:18:03
    95.216.96.254 12/Mar/2021:17:07:54
    95.216.96.254 19/Jan/2021:03:40:15
    95.216.96.254 20/Feb/2021:04:45:35
    95.216.96.254 30/Jun/2021:01:31:44

Run command on each line of a text file, increment a counter, and show line number with context

    [[redacted] ~]$ let count=0; while read line; do let count=count+1; echo "Line: $count IP: $line"; geoiplookup $line; done < access.txt | grep -B 1 Russia
    Line: 237 IP: 195.201.166.168
    GeoIP Country Edition: RU, Russian Federation
    --
    Line: 269 IP: 212.192.246.107
    GeoIP Country Edition: RU, Russian Federation
    --
    Line: 566 IP: 77.88.5.40
    GeoIP Country Edition: RU, Russian Federation
    Line: 567 IP: 77.88.5.50
    GeoIP Country Edition: RU, Russian Federation
    --
    Line: 612 IP: 93.158.161.33
    GeoIP Country Edition: RU, Russian Federation
    Line: 613 IP: 93.158.161.58
    GeoIP Country Edition: RU, Russian Federation

The God Interface

Emergency anti-lockout PHP script "smites" individual shell sessions or kills all shell sessions and all associated processes. Assumes that your Apache server has the privileges necessary to execute root commands, so obviously don't allow non-privileged users to put this (or other such scripts) on the system. This is not intended for a shared system, and it can be prevented by disabling passthru, exec, etc. in the php.ini file. Use in conjunction with tip no. 2 below if desired. Version one of the script just kicks the user; the next version will ban them as well using fail2ban, and will email the user and/or administrator a password reset link for the kicked user. Output:

Copy/paste the script below into a PHP file called "kill.php" and place it in a secure location protected by a .htaccess file, at a minimum. Note: this version kills all user processes for the logged in users, so if you have any system processes running under that user, it will capture those as well. That would be useful if an APT started a bunch of processes and then logged out, then logged back in again. That's why you need to be careful. Next version will have the option of only killing processes with PID greater than the user's shell login so that no earlier processes are terminated. But that could limit the effectiveness of the tool if there are repeated logins, among other issues, so I will note that in the options.

    <?php
    echo "<a href=\"./kill.php\">The God Interface</a><BR><BR>This page is for emergency use only in the event of a takeover of the server by an Advanced Persistent Threat. The script either 'smites' the user session on a per-session basis, or kills all shell sessions if there are too many shells being spawned. Use with caution.<BR><BR>";

    // show shell users
    passthru("w | perl -pe 's/\n/<BR>/g'");
    echo "<BR>";

    $action = stripslashes(strip_tags($_POST['action']));
    if ($action == "") {
        $action = stripslashes(strip_tags($_GET['action']));
    }

    if ($action == "killall") {
        // require explicit confirmation before killing everything
        if (stripslashes(strip_tags($_POST['confirm'])) != "1") {
            die("Confirmation is required. Try again.");
        }
        echo "Killing all shells and all associated processes...";
    }

    if ($action == "smite") {
        $shell = stripslashes(strip_tags($_GET['shell']));
        echo "Will smite shell $shell...<BR>";
        // escapeshellarg() keeps the request parameter from injecting shell syntax
        $match = escapeshellarg($shell);
        passthru("ps ax | grep $match | grep -v 'grep' | perl -pe 's/\n/<BR>/g'");
        exec("ps ax | grep $match | grep -v 'grep'", $pids);
        foreach ($pids as $key => $value) {
            // ps right-aligns the PID column, so trim before splitting
            $pid = explode(" ", trim($value));
            echo "Process " . $pid[0] . " ($value)";
            passthru("kill -9 " . $pid[0]);
            echo "...done.<BR>";
        }
    }

    // put shell users in array
    exec("w", $output);
    foreach ($output as $outputkey => $outputvalue) {
        // condense double spaces to single spaces
        $output[$outputkey] = str_replace("  ", " ", $output[$outputkey]);
        // put line into array
        $line = explode(" ", $output[$outputkey]);
        // debug
        // echo "<BR>" . print_r($line) . "<BR>";
        $user = $line[0];
        if (!strstr($user, "USER") and strlen($user) > 0) {
            echo "<BR><BR>User: $user<BR><BR>";
            if ($action == "killall") {
                passthru("pkill -9 -u " . escapeshellarg($user));
                echo "Killed all processes for user $user...<BR>";
            }
            if ($action != "killall" and strstr($output[$outputkey], "pts/")) {
                echo "Matched shell session for $user at " . $line[1] . " from " . $line[3] . " <a href=\"./kill.php?action=smite&shell=" . $line[2] . "\">smite</a><BR>";
            }
        }
    }
    echo "Done.";
    ?>

Instant email notification of successful SSH logins

This is a lifesaver if someone guesses your password or steals an SSH key. The original post is from Stack Exchange; I made a couple of small changes.

Add the following to your .bash_profile:

IP="$(echo $SSH_CONNECTION | cut -d " " -f 1)"
HOSTNAME=$(hostname)
NOW=$(date +"%e %b %Y, %a %r")
echo 'Someone from '$IP' logged into '$HOSTNAME' as you at '$NOW'.' | mail -s 'SSH Login Notification' user@example.com

Replace "user@example.com" with your preferred email address or your text message address from your mobile phone provider, or both (separate multiple addresses with a space). Now set your phone to notify you when emails come through from your server's email address. On the iPhone, you add the email address to VIPs and it automatically notifies you. This way you find out within seconds of any successful login, instead of days, and you can take prompt countermeasures, including resetting passwords if the attacker gets as far as changing your root password. This also makes it harder for an attacker to completely cover their tracks, since the script executes the first time they log in, before they know anything about the server. Even if they see the email in the mail log, they might not realize they got caught unless they review .bash_profile as well.

I may add a one-click ban feature that bans unrecognized IPs with a custom URL that executes fail2ban and resets the user's password. I may also set windows of time, such as 10 p.m. to 6 a.m., when logins from unexpected IP addresses will automatically trigger a password reset. Still working on it. Note that this method notifies upon shell logins, but not SFTP logins, which is the desired behavior for most people. I plan to integrate tip no. 1 above with this one in the near future.
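Here is a minimal sketch of the same notification wrapped in a function, so that it only fires for SSH sessions and the message text can be checked without sending mail. The function name login_notice is hypothetical, and it assumes SSH_CONNECTION has its usual "client_ip client_port server_ip server_port" layout.

```shell
# login_notice CONNECTION HOST WHEN
# Hypothetical helper: builds the notification text from an
# SSH_CONNECTION-style string ("client_ip client_port server_ip server_port").
login_notice() {
    conn=$1
    host=$2
    when=$3
    ip=${conn%% *}    # everything before the first space is the client IP
    printf 'Someone from %s logged into %s as you at %s.\n' "$ip" "$host" "$when"
}

# In .bash_profile (the address is a placeholder), skip non-SSH logins:
# if [ -n "$SSH_CONNECTION" ]; then
#     login_notice "$SSH_CONNECTION" "$(hostname)" "$(date +'%e %b %Y, %a %r')" \
#         | mail -s 'SSH Login Notification' user@example.com
# fi
```

The guard on SSH_CONNECTION keeps console logins from sending a message with a blank IP address.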

List recently active users in a secure web portal

<script>
function slider() {
    document.getElementById('rangevalue').value = document.getElementById('slider').value;
    window.location.href = "./?slider=" + document.getElementById('slider').value;
}
</script>
<?php
// number of log lines to analyze; the integer cast makes the value safe to pass to the shell
$lines = (int) ($_GET['slider'] ?? 0);
if ($lines <= 0) {
    $lines = 500;
}
// newest log file matching the pattern
$logfile = exec('find /var/log/httpd/andrew-ssl-access* -exec ls -1t "{}" + | head -n 1');
// lines in the selected window that mention a portal user
$recent = 'tail -n ' . $lines . ' ' . escapeshellarg($logfile) . ' | grep "andrew\|lindsey\|arielle\|lauren"';
// first and last timestamps in the window, then the unique IP/user pairs
passthru($recent . ' | cut -d " " -f 4 | sort | head -n 1 | sed "s/\(\]\|\[\)//g"; '
    . $recent . ' | cut -d " " -f 4 | sort | tail -n 1 | sed "s/\(\]\|\[\)/to /g"; '
    . 'echo "<BR>"; '
    . $recent . ' | cut -d " " -f 3,1 | sort -k 1 | sort -u | grep -v "-" | perl -pe "s/\n/<BR>/g"');
echo "Analyze last <input type=\"text\" id=\"rangevalue\" size=\"4\" value=\"$lines\"> lines<BR><input id=\"slider\" type=\"range\" min=\"100\" max=\"1000\" step=\"100\" value=\"$lines\" onmouseup=slider()><BR>of $logfile";
?>

Explanation: searches the most recent SSL access log for unique IPs/users during a variable window of time covered by the last X log lines, with the number of lines specified by an HTML5 slider. If you have a web portal secured by .htaccess files, you can easily get the logged-in user name in a PHP global variable: $_SERVER['REMOTE_USER']. This script doesn't require any of that; all you have to do is list your .htaccess users in the grep expression. It is extremely helpful to see the last couple of days of logins using an adjustable slider. It currently looks at the newest log file matching the specified pattern. Next step, I could just cat the .htpasswd file and run each of those names so I don't have to change the hard-coded list each time.
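The "next step" mentioned above could look roughly like this: a sketch that turns the user names in an htpasswd-style file into a pattern for grep -E. The function name htpasswd_pattern is hypothetical, and the file is assumed to hold one "user:hash" entry per line.

```shell
# htpasswd_pattern FILE
# Print the user names from an htpasswd-style file (one "user:hash" entry
# per line), joined with "|" so the result can be handed to grep -E.
htpasswd_pattern() {
    cut -d ':' -f 1 "$1" | grep -v '^$' | paste -s -d '|' -
}

# Example (path is a placeholder):
# tail -n 500 "$logfile" | grep -E "$(htpasswd_pattern /path/to/.htpasswd)"
```

As with the hard-coded list, a name matches anywhere on the log line, and a user name containing regular expression characters would need escaping first.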

Sample output

Inline output of website visitor statistics in PHP

You can embed webserver statistics inline for any purpose:

As of this moment, the last 90 days of log files show <strong><span style="color:red;"><?php passthru("grep \" \/syn\/ \" /var/log/httpd/andrew*access* | grep -E -o \"([0-9]{1,3}[\.]){3}[0-9]{1,3}\" | wc -l"); ?></span></strong> visits, of which <?php passthru("grep \" \/syn\/ \" /var/log/httpd/andrew*access* | grep -E -o \"([0-9]{1,3}[\.]){3}[0-9]{1,3}\" | sort -u | wc -l"); ?> were from unique IPs.

Explanation: replace the log path with your log path. In this case, I wanted to capture all the visits and all the unique IPs accessing the /syn/ page on my website and output them inline in the web page itself. This worked perfectly and only takes about 190 milliseconds with dozens of megabytes of log files; unless I get DDoS'ed, I am all good here. Yeah, I could improve this by saving the output of the first command and then filtering for unique IPs within PHP, so I will do that next in order to halve the processing time. If you want to get really creative, you can execute this command hourly with cron and just save the results in a text file, then embed them in the page instead. I intend to do that and document it as part of my effort to create a dashboard for my internal use.
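A rough sketch of the single-pass idea, suitable for running from cron and saving to a text file. The function name visit_stats and the output path are hypothetical. Unlike the grep -o version, it counts one IP per log line and assumes the client IP is the first space-separated field, as in the common and combined log formats.

```shell
# visit_stats PATTERN
# Read access-log lines on stdin and print "<total visits> <unique IPs>"
# for the lines that contain PATTERN (plain substring match).
visit_stats() {
    awk -v pat="$1" '
        index($0, pat) {
            total++
            if (!($1 in seen)) { seen[$1] = 1; unique++ }
        }
        END { printf "%d %d\n", total, unique }
    '
}

# Example cron command (output path is a placeholder):
# cat /var/log/httpd/andrew*access* | visit_stats " /syn/ " > /path/to/stats.txt
```

The page can then read the two numbers from the text file instead of scanning the logs on every request.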

Extract IP address from SSH/SFTP log and report matches in web server logs

This is useful if you want to see whether any visitors to your website also attempt to hack your server via SSH. I ran this expecting to find a bunch of matches, but there were literally a couple of matches and they have legitimate explanations.

Method one:

grep -E -o "([0-9]{1,3}[\.]){3}[0-9]{1,3}" /var/log/secure | sort -u | while read -r line; do echo "Processing $line..."; grep -F "$line" access* | grep -v "50\.237\."; done

Explanation: searches the most recent SSH log for unique IPs and matches those against web server log files, excluding my own IP address. The -F flag makes grep treat each IP as a literal string, so the dots are not read as wildcards. I ran this and thought that I found one actual hacker, who visited my website and then tried to log in via SSH...but this turned out to be me using SFTP from a client's office...lol.

Method two:

grep -E -o "([0-9]{1,3}[\.]){3}[0-9]{1,3}" iptables-output | grep -v "0\.0\.0\.0" | sort -u | while read -r line; do echo "Processing $line..."; grep -F "$line" access*; done

Explanation: if you're running fail2ban, save your iptables output in a text file and replace iptables-output with your file name. Replace access* with your web server access log. The script searches your access logs for any IP addresses that also appear in the fail2ban log or iptables output, and returns any matches. In the future, I plan to add all kinds of analytics, such as the sequence of visits on my website by analyzing timestamps and the path each user takes (useful for the upcoming Andy's Dashboard offering).
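For large logs, an alternative sketch is to extract the unique IPs from each file once and intersect the two lists, instead of re-reading the access log for every IP. The function names extract_ips and shared_ips are hypothetical; this reports only which IPs appear in both files, not the matching log lines.

```shell
# extract_ips: print each unique IPv4-looking string found on stdin, sorted.
extract_ips() {
    grep -E -o '([0-9]{1,3}\.){3}[0-9]{1,3}' | sort -u
}

# shared_ips FILE_A FILE_B: print the IPs that occur in both files.
shared_ips() {
    a=$(mktemp) && b=$(mktemp) || return 1
    extract_ips < "$1" > "$a"
    extract_ips < "$2" > "$b"
    comm -12 "$a" "$b"    # keep only lines common to both sorted lists
    rm -f "$a" "$b"
}

# Example: shared_ips /var/log/secure access.log
```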

Find each visit by a unique IP and show the dates they visited your website

cat andrew-*access* | grep "/linux/ " | cut -d " " -f 1,2,3,4,5,6,7,8 | sort -k 4 -k 2M -k1 -k4 | grep -E "([0-9]{1,3}[\.]){3}[0-9]{1,3}" | sort -k 7

Explanation: This is super useful because you can get a report of repeat visitors showing a simple list of accesses to your site, sorted by IP and then in the order of the visits. You'll see patterns that you never would have noticed. This is going to be a wicked addition to Andy's Dashboard once I get that running.
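A variation on the same report, as a sketch: print just the IP and timestamp, group by IP in numeric order, and rely on a stable sort so each IP's visits stay in the order they appear in the log. The function name visits_by_ip is hypothetical, and it assumes the combined log format, where field 1 is the IP and field 4 is "[dd/Mon/yyyy:hh:mm:ss".

```shell
# visits_by_ip PATTERN
# Read access-log lines on stdin and print "IP timestamp" for lines that
# contain PATTERN, grouped by IP in numeric order.
visits_by_ip() {
    awk -v pat="$1" 'index($0, pat) { print $1, substr($4, 2) }' \
        | LC_ALL=C sort -s -t . -k 1,1n -k 2,2n -k 3,3n -k 4,4n
}

# Example: cat andrew-*access* | visits_by_ip "/linux/ "
```

Because the sort is stable (-s), visits from the same IP keep their original order, which is chronological as long as the input lines are.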

Monitor a long process and notify when it completes

Method one:

while ps -p 74189 > /dev/null; do echo "Process pending"; sleep 2; done; say "all done"

Explanation: replace "74189" with the process ID you want to monitor. This polls the system every two seconds and (on macOS) verbally says "all done" when the process is done. I'll probably ring the terminal bell on Linux. Next, I will do a one-line command that doesn't require finding the process ID first, and will provide the option of email notification if the process takes longer than the specified time.

Method two:

while ps -p 300851 > /dev/null; do echo "Process pending"; sleep 2; done; echo "Process done" | mail -s "Process notification" andrew@andrewwatters.com

Explanation: same as method one, but emails you upon completion. Critically important to this one, the process check must sit in the loop condition so that it runs again on every pass; a result captured once before the loop never changes. I intend to do a one-liner in the near future where you don't have to find the process ID first. Should be straightforward, since the process ID is given immediately whenever you use the & operator (and is also available in $!).

Method three: watch "ps ax | grep tar" and you'll at least have a simple indication that the process you're looking for is still running.
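A minimal sketch of the "no need to look up the process ID" idea: start the job with &, take its ID from $!, and poll until it is gone. The function name wait_for_pid is hypothetical. It uses kill -0, which sends no signal and only tests whether the process can be signalled, so it works for your own processes (or any process, as root).

```shell
# wait_for_pid PID [INTERVAL]
# Block until process PID no longer exists, polling every INTERVAL
# seconds (default 2).
wait_for_pid() {
    pid=$1
    interval=${2:-2}
    while kill -0 "$pid" 2>/dev/null; do
        sleep "$interval"
    done
}

# Example (archive name and address are placeholders):
# tar -czf backup.tar.gz /home & wait_for_pid $!; echo "Process done" | mail -s "Process notification" user@example.com
```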

Receive alerts if a particular person shows up as a party in Federal court

curl -v --silent https://ecf.cand.uscourts.gov/cgi-bin/rss_outside.pl 2>&1 | grep "Watters"

Here, I search the output of my local U.S. District Court's RSS feed to see the last 24 hours or so of filings and whether I am in them. The above command is manual, which is pointless due to the volume of filings and short window in the RSS feed. Instead, I recommend a twice-daily cron job that emails you if matches are found (because you would want to know as soon as possible):

if curl -v --silent https://ecf.cand.uscourts.gov/cgi-bin/rss_outside.pl 2>&1 | grep -q "Apple"; then echo "Apple found in CAND ECF" | mail -s "CAND RSS results" andrew@andrewwatters.com; else echo not found; fi

In this variant, I email myself if the search term is found so that I can go back and check the particular item manually. The -q flag makes grep print nothing and just exit with success if the term is found and failure if it is not, which is all the if statement needs no matter how many matches there are. I've put this in a shell script with some other items and have added a cron job, with email notification suppressed so that I only get emails if the search term is found. I think this is the fastest way to find out whether you or someone whose litigation you want to follow has been sued in Federal court, and it will capture most civil and criminal filings (it won't capture sealed indictments, but if that happens then you have bigger problems). Next on the feature list, I will add context so that I get links to the relevant filings, case number, etc. and can click them directly from the email notice.
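One possible way to add that context, as a sketch only: pull the title and link out of each matching feed item. The function name rss_matches is hypothetical, and the code assumes plain RSS 2.0 items of the form <item><title>...</title><link>...</link></item> without attributes; I have not checked the court feed's exact layout, so treat the tag names as assumptions.

```shell
# rss_matches TERM
# Read an RSS 2.0 document on stdin and print "title<TAB>link" for each
# <item> whose text contains TERM (case-insensitive).
rss_matches() {
    tr '\n' ' ' | awk -v term="$1" '
        # return the text between <tag> and </tag> inside s, or "" if absent
        function field(s, tag,    opening, closing, start, len) {
            opening = "<" tag ">"; closing = "</" tag ">"
            start = index(s, opening)
            if (start == 0) return ""
            s = substr(s, start + length(opening))
            len = index(s, closing)
            return (len == 0) ? "" : substr(s, 1, len - 1)
        }
        {
            n = split($0, items, "</item>")
            for (i = 1; i <= n; i++) {
                pos = index(items[i], "<item>")
                if (pos == 0) continue
                item = substr(items[i], pos)
                if (index(tolower(item), tolower(term)))
                    printf "%s\t%s\n", field(item, "title"), field(item, "link")
            }
        }
    '
}

# Example:
# curl --silent https://ecf.cand.uscourts.gov/cgi-bin/rss_outside.pl | rss_matches "Watters"
```

The output can be piped to mail only when it is non-empty, so the notice carries the case title and a link for each match.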

Mass rename a list of EML files to put the dates first in the name instead of last

Adapted from a post at Stack Exchange. This is useful when you are exporting hundreds of EML files from Thunderbird, which puts the date at the end instead of the beginning of the file name. Renaming the files en masse lets you sort by date.

Preview:

for file in *.eml; do no_extension=${file%.eml}; the_date=$(echo "${no_extension}" | rev | cut -d ' ' -f 2 | rev); year=${the_date:0:4}; month=${the_date:5:2}; day=${the_date:8:2}; date_part=${year}-${month}-${day}; filename_part=$(echo "${no_extension}" | cut -d ' ' -f 1-); new_file="${date_part} ${filename_part}.eml"; echo "${file} -> ${new_file}"; done

Actual:

for file in *.eml; do no_extension=${file%.eml}; the_date=$(echo "${no_extension}" | rev | cut -d ' ' -f 2 | rev); year=${the_date:0:4}; month=${the_date:5:2}; day=${the_date:8:2}; date_part=${year}-${month}-${day}; filename_part=$(echo "${no_extension}" | cut -d ' ' -f 1-); new_file="${date_part} ${filename_part}.eml"; mv "${file}" "${new_file}"; done

Look up DNS record for each IP address in webserver log file

Method one:

cat logs/raellic.com/http/access.log | grep "Vision" | grep -E -o "([0-9]{1,3}[\.]){3}[0-9]{1,3}" | nslookup | grep "name ="

Returns a list of all the hosts in my server access log that accessed a PDF with Vision in the title.

Method two:

cat logs/raellic.com/http/access.log | grep "Vision" | grep -E -o "([0-9]{1,3}[\.]){3}[0-9]{1,3}" > blah.txt; while read IP; do echo "$IP"; whois "$IP"; sleep 2; done < blah.txt > bluh.txt &

Executes whois instead of nslookup. Note that this may result in rate limiting or even a ban if there are too many lines in the log file. I recommend including "sleep 2" in the command so there is a built-in rate limit, but you still need to be careful with the total number of queries.
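One way to keep the total number of queries down, sketched here, is to collapse repeated IPs before looking anything up, so each address is queried once. The function name ip_hit_counts is hypothetical.

```shell
# ip_hit_counts
# Read log lines on stdin and print "count ip" for each distinct
# IPv4-looking string, most frequent first.
ip_hit_counts() {
    grep -E -o '([0-9]{1,3}\.){3}[0-9]{1,3}' \
        | awk '{ n[$0]++ } END { for (ip in n) print n[ip], ip }' \
        | sort -k 1,1nr -k 2,2
}

# Example: one whois query per distinct IP, still rate limited
# grep "Vision" logs/raellic.com/http/access.log | ip_hit_counts \
#     | while read -r count ip; do echo "$ip ($count hits)"; whois "$ip"; sleep 2; done
```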

Solving "body hash did not verify" error with DKIM when trying to send attachments from a PHP script

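Wrap the long lines of the Base64-encoded data to 76 characters (the maximum RFC 2045 allows for a Base64 line) and break with a newline character (\n). Note that wordwrap() returns the wrapped string, so the result has to be assigned:

<?php $attachment = wordwrap($attachment, 76, "\n", true); ?>

Now your DKIM signature will be valid because the mail server no longer has to re-wrap over-long lines after the message is signed, so the message body stays intact.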
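To confirm the wrapping took effect, a quick check is to measure the longest line of a saved copy of the outgoing message. This is only a sketch; the function name longest_line and the file name in the example are hypothetical.

```shell
# longest_line: print the length of the longest line read from stdin,
# ignoring a trailing carriage return on each line.
longest_line() {
    awk '{ sub(/\r$/, ""); if (length($0) > max) max = length($0) } END { print max + 0 }'
}

# Example: longest_line < message.eml
```

If the number is far above the wrap width, some part of the message is still being sent as one long line.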

-

This is really annoying: libmilter is only available if you download the Sendmail source code and manually build it. Here you go:

wget https://www.andrewwatters.com/linux/sendmail-current.tar.gz
tar -xvzf sendmail-current.tar.gz
cd sendmail-8.15.2
cd libmilter
./Build
make
sudo make install

That's it! Now when you try to install opendkim, you won't get error messages about libmilter being missing.

Create subtitled video with album art and high resolution audio

ffmpeg -framerate 1 -loop 1 -i final-cover.png -i album.ogg -acodec copy -vcodec libvpx-vp9 -crf 20 -b:v 0 -vf subtitles=album.ass -shortest album1.webm

This assumes you already have a high resolution opus/ogg file of the album. The album.ass file is the subtitle file, which can be created with gnome-subtitles or something more advanced such as Aegisub. Further details here. I selected the VP9 codec for compatibility and opus/ogg audio for best quality audio and broad compatibility.

Merge, split, and/or Bates stamp PDF files on Linux

Seriously, this is one of the hardest things I have ever done. Finding the right tool was half the challenge.

I am pleased to recommend CPDF, which is free for personal and non-commercial use, but otherwise does require a license (worth it).

Merge

Merge is the default operation:

cpdf -merge file1.pdf file2.pdf -o file.pdf

Split into n-page chunks

cpdf -split -chunk 20 file.pdf -o piece%%%.pdf

Unfortunately, neither CPDF nor PDFTK can apparently split a file based on file size of the resulting chunks. This is extremely frustrating when there are file size limitations on certain courts' electronic filing systems. The solution I use is to count the number of pages (cpdf -pages file.pdf), divide by the file size in megabytes, split the PDF using that number of pages per chunk, then manually combine the resulting chunks until they are just under the file size I want. Frustrating, and begging for automation. I could probably write a script to do that, but it would be nice if there were a tool already out there.

Bates Stamp

This knowledge took me over a year and a half to obtain. I present it here for free to save others time.

cpdf -add-text "%Bates" -bates 1 file.pdf -bottomright 30 -o stamped.pdf

Change a RHEL 7 system from graphical login to shell login, and then start KDE

localhost$ sudo systemctl set-default multi-user.target
localhost$ reboot

[After rebooting]

localhost$ startx /usr/bin/startkde

You would not believe how long it took me to figure that out. If you just do startx, it will freeze your system if there are any problems with GNOME.

© 2025 Andrew G. Watters

Last updated: February 20, 2025 16:49:31